Version 0.14 is the newest experimental release for Crochet. It fixes some of the most glaring semantic issues in the language, and adds quite a few new packages, with a particular focus on debugging. The changelog summarises all of these.

You can install it from npm as usual:

$ npm install -g @qteatime/crochet@experimental

Since these are experimental pre-releases, do note that there will be many issues (including security issues!) in them. Treat Crochet as a toy, not a finished programming system.

Anyway, let’s talk about the stuff that is included in this version!

In this release

- A new Playground

- New enum semantics

- Named constructors and extensible types!

- No more dynamic records

- Trace debugging and representations

- Compiler plugins

- Optional capabilities

- New abstractions for effect handlers

- A preview of upcoming packages

- Additions to existing packages

A new Playground

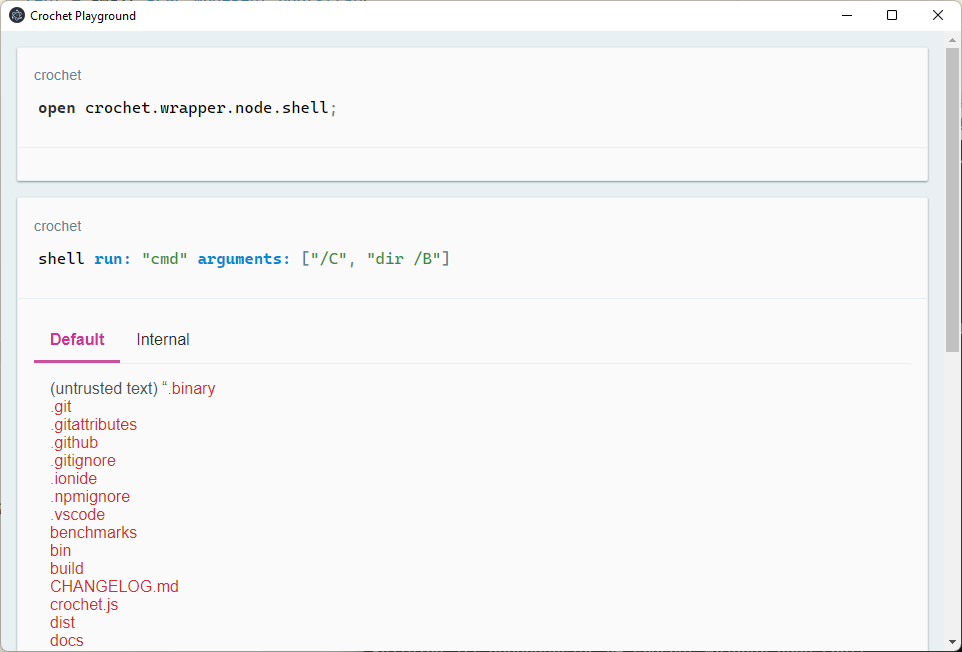

The first experimental release of Crochet shipped with a tool called “Crochet Launcher”, which provided ways of creating new packages and interacting with them—running them, testing them, and interactively programming against them with a REPL.

But the Launcher tool was too ad-hoc in many places. This release does away with the old Launcher tool, and introduces a new, less ambitious Playground tool. The idea of the Playground is to provide an interactive shell (REPL) for any Crochet project and, in the future, other code-specific interactions as well: a graphical debugger and a code browser in the same vein as Smalltalk’s.

A demonstration of the new Playground tool

The new Playground supports the same rich output as before—values can use Crochet’s debug representations to define all sorts of ways in which users can look at them.

More interesting, however, is that these same debug representations also work seamlessly for packages that cannot be run in the Browser. The new Playground supports Node packages in the same way; you just start it with --target node:

$ crochet playground some/node-package/crochet.json --target node

And you can interact with it from your web browser in the same way. The Playground makes RPC calls to the kernel process running the Crochet package, and takes care of the serialisation between the two worlds. Since debug representations are always serialisable and safe to transfer, this means you get the same level of rich debugging as with Browser packages.

Interacting with the OS shell from the Browser

Be cautious, though! REPLs are intrinsically unsafe—you’re allowing a program to run arbitrary code on your computer, with the capabilities you’ve accepted. For Node playgrounds, that means running arbitrary code with all of your user’s powers.

Crochet’s Playground uses Electron’s built-in RPC mechanism along with an opaque capability to restrict, as much as possible, the execution of this code to the Playground UI. This makes the Playground UI and the Crochet VM both very sensitive trusted applications.

The Playground assumes that nothing can read the opaque RPC capability before the Playground UI has a chance to; that nothing is intercepting your network traffic on localhost; that Browserify generates correct code; and that both the Electron and the Node codebases are safe to run as user-level processes.

This is currently marked as a known security issue, and we do plan to move away from as much of this trusted external base as possible and into verified compilers and codebases instead. But that will take time…

New enum semantics

Previously, enum definitions were not namespaced by default, because Crochet does not reify a concept of namespaces. In this release we’ve moved a bit towards reifying that notion. That means that enums are now always qualified.

That is, if you have something like:

enum direction = north, east, south, west;

The earlier version of Crochet would expand this declaration into the equivalent of:

singleton direction;

singleton north is direction;

singleton east is direction;

singleton south is direction;

singleton west is direction;

The new version, instead, expands it into the equivalent of:

singleton direction;

singleton direction--north is direction;

singleton direction--east is direction;

singleton direction--south is direction;

singleton direction--west is direction;

Along with local aliases for the shorter names:

alias type direction--north as north;

alias type direction--east as east;

alias type direction--south as south;

alias type direction--west as west;

Aliases are currently a module-local concept. This means that, within the module that defines the alias, you can refer to these types using either their full name (direction--north) or their short name (north). But outside of the module, only the full name works.

This release also adds a couple of new commands to enums by default. You can access all of the enum cases as commands on the static parent type. That is, #direction north and direction--north refer to the same value. So if you have an alias definition such as define d = #direction, then d north would also refer to direction--north.

Because enums are often used to represent textual possibilities from the outside world, this release also paves the way towards that a little bit. The command to-enum-text returns the short name of the type as a trusted piece of text. #enum from-enum-text: _ takes the short name as a trusted piece of text and converts it to the equivalent enumeration type.

That is, #direction north to-enum-text would yield "north". And #direction from-enum-text: "north" would yield direction--north. The law #enum from-enum-text: (enum to-enum-text) =:= enum applies.

There’s currently no way of disabling these generated commands, or controlling the textual serialisation for them, but that is planned for the future.
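To make the round-trip law a bit more concrete, here is a minimal sketch of it in Python rather than Crochet. The Direction class and its to_enum_text and from_enum_text methods are stand-ins named after the Crochet commands; they are assumptions made for illustration and are not part of any Crochet API.

```python
from enum import Enum


class Direction(Enum):
    """Python stand-in for `enum direction = north, east, south, west;`."""

    NORTH = "north"
    EAST = "east"
    SOUTH = "south"
    WEST = "west"

    def to_enum_text(self) -> str:
        # Mirrors `_ to-enum-text`: the short name of the case.
        return self.value

    @classmethod
    def from_enum_text(cls, text: str) -> "Direction":
        # Mirrors `#direction from-enum-text: _`.
        # Raises ValueError when the text names no case.
        return cls(text)


def round_trip_holds() -> bool:
    # The law: from-enum-text(to-enum-text(e)) is e, for every case e.
    return all(Direction.from_enum_text(d.to_enum_text()) is d for d in Direction)
```

Checking the law over every case, as round_trip_holds does, is cheap because an enum is a closed set.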

Named constructors and extensible types!

Crochet’s new expression was limited to positional arguments before.

This meant that if you had a type definition like:

type padding(

top is numeric,

right is numeric,

bottom is numeric,

left is numeric

);

All construction sites would have to use the positional form, which made it difficult to see exactly what you were providing to the type, and also made extending the type complicated:

new padding(0, 1, 0, 1); // we all love magic numbers, right?

You can now construct types by providing the field names for each value. The runtime ensures the same capabilities as before, and it verifies that the fields provided cover all requirements from the type, as well as not containing any extraneous field:

// this works

new padding(top -> 0, right -> 1, bottom -> 0, left -> 1);

// this doesn't

new padding(top -> 0, bottom -> 0); // missing right and left

// this also doesn't work (`center` isn't a field in this type)

new padding(top -> 0, bottom -> 0, right -> 0, left -> 0, center -> 1);

More exciting, however, is that having named arguments for the type constructor enables us to support extending existing values, that is, deriving a new value from an existing one with some of its fields changed. All values are immutable in Crochet, which leads to the following pains:

let P1 = new padding(top -> 0, bottom -> 0, right -> 1, left -> 1);

// Now we want to modify `P1` to also include padding for top and bottom

// fields, but the only way to do that is to construct a whole new object.

let P2 = new padding(top -> 1, bottom -> 1, right -> 1, left -> 1);

The new extend operation is based on a similar idea in languages like Erlang, and on extensible records as seen in languages like Elm. It allows you to provide a small set of things you want to change in an existing object:

let P1 = new padding(top -> 0, bottom -> 0, right -> 1, left -> 1);

let P2 = new padding(P1 with top -> 1, bottom -> 1);

You may ask: “why do I need to know the type of P1 though?”. The reason is just that Crochet wants things to be as statically analysable as possible in these cases because, otherwise, interactive tooling for helping programmers write this code can’t really do that much. Type inference could end up yielding too many types for suggestions to be sensible or useful.

Incidentally this will also help the compiler optimise programs in the future, but that’s not a concern right now.

Another similar addition here is that records now have a proper extend operation as well. It looks similar to the typed one, except there are no types involved, and thus tooling can’t help much here:

let P1 = [top -> 0, bottom -> 0, right -> 1, left -> 1];

let P2 = [P1 with top -> 1, bottom -> 1];
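For readers who have not met this style of update before, the same idea can be sketched in Python with frozen dataclasses. This is only an analogy: Padding, p1, p2, and the rejects helper are names made up for this example, and dataclasses.replace stands in for Crochet’s extend operation.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Padding:
    """Immutable stand-in for `type padding(top, right, bottom, left)`."""

    top: int
    right: int
    bottom: int
    left: int


# Named construction: every field must be given, and no extra field is allowed.
p1 = Padding(top=0, right=1, bottom=0, left=1)

# "Extend": copy p1, overriding only the listed fields. p1 itself is unchanged.
p2 = replace(p1, top=1, bottom=1)


def rejects(**fields) -> bool:
    """Return True if constructing a Padding from these fields fails."""
    try:
        Padding(**fields)
    except TypeError:
        return True
    return False
```

Here rejects(top=0, bottom=0) is true because right and left are missing, and adding a center field is rejected as well, which matches the two failing cases shown earlier.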

No more dynamic records

Speaking of records, this release cements them as a static construct only. So they are now exactly the untyped version of Crochet’s typed data: you cannot have something like “iterate over all key/value pairs of this record”. At least not without converting it to a regular map type.

This release also gets rid of the dynamic field projection (Value.(SomeFieldName), where SomeFieldName should evaluate to a text with the field name), which was, by mistake, also enabled on typed data before…

Trace debugging and representations

A lot of the work in this release was on improving the debugging story for Crochet. Programs in Crochet are still quite impossible to debug, mind you, and the effort will continue in the next release, but things have improved a little bit.

The main contributions of this release are the debugging representations, an extensible set of ways of representing Crochet values, and tracing support, which is needed to empower users to build their own domain-specific debugging tools, and will also power Crochet’s built-in time-travelling debugger.

These two features really work in tandem. We want users to build their own debugging tools, in ways that make sense for their own context, rather than giving them a set of “generic debuggers” and hoping they’ll be able to adapt them to their reality. This means that we need to make “writing a debugger” not only possible, but also reasonably cheap. We’re not there yet, but these two additions are a good step in that direction.

Multiple representations of data

Why do we need this “debugging representations” business anyway? Well, consider, for example, the case where we have a couple of instant values:

type time-range(start is instant, stop is instant);

How do you represent this value if you want to display it to the user? Well, in Node, the answer is this:

> new TimeRange(new Date(2022, 1, 1), new Date(2022, 2, 24))

TimeRange {

start: 2022-01-31T23:00:00.000Z,

stop: 2022-03-23T23:00:00.000Z

}

Here we’re given a textual representation of the date in ISO format, which is often useful. But what if we’re trying to look for a specific value in timestamp format? Well, now we have to parse that textual representation and explicitly ask for .getTime(). Possible, but annoying. What if we want to check what this date looks like in a calendar? Well, now we will usually pull up a calendar in a separate program and look for the date there. Possible, but even more annoying. What if we, then, want to check how much time this time range comprises? Well, we would hope the object itself provides a method that tells us this in some useful way, but there’s no such thing in JavaScript’s Date type; we’d need to build our own.

The point here is not “JavaScript is bad” (other languages have the same problem anyway). The point is that we look at debugging representations when we have certain questions. And the usefulness of these representations changes depending on the questions and context that we have. If the language forces a single representation on the user, or if the language makes it difficult to look at a value in a particular way, then users will need to do some additional work (possibly without the language’s help) to achieve what they want. And that sucks!

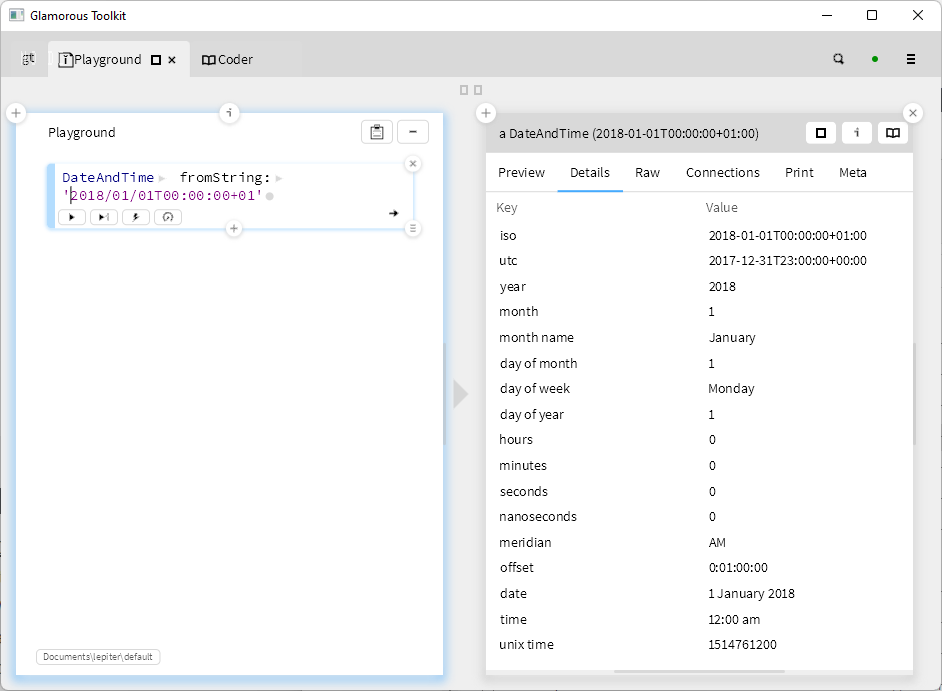

This idea of interacting with values through different “lenses” isn’t really new. You see pieces of it in early Smalltalk, in Ungar and Smith’s Us, and in all of the things that emerged from Context-Oriented Programming. And, more recently, you see it in tools like Glamorous Toolkit.

GToolkit showing different representations of a Date

Likewise, in Crochet we can have values provide different ways in which we can look at them. Crochet allows these lenses to be extensible, without any loss to the capability-security-based boundaries, by relying on multi-methods and the Perspective types.

That is, one can construct as many perspective types as needed, and then define representations of each value based on those perspectives. For our time range we could start by defining a few perspectives:

open crochet.time;

type date-perspective is perspective;

type duration-perspective is perspective;

command date-perspective name = "Date and time";

command duration-perspective name = "Duration";

Then defining representations for these perspectives:

command

debug-representation

for: (X is instant)

perspective: date-perspective

do

#document plain-text: (X to-iso8601-text);

end

command

debug-representation

for: (X is duration)

perspective: duration-perspective

do

#document fields: [

days -> #document number: X days,

hours -> #document number: X hours,

minutes -> #document number: X minutes,

seconds -> #document number: X seconds,

] | typed: #duration;

end

command

debug-representation

for: (X is time-range)

perspective: (P is default-perspective)

do

#document fields: [

start -> self for: X.start perspective: new date-perspective,

stop -> self for: X.stop perspective: new date-perspective,

] | typed: #time-range;

end

command

debug-representation

for: (X is time-range)

perspective: (P is duration-perspective)

do

let Duration = X.start until: X.stop;

self for: Duration perspective: P;

end

And by evaluating something of type time-range we’d be able to look at it from the different perspectives we’ve defined:

The Playground showing time-range as a structured table of dates, and as the duration between the two dates

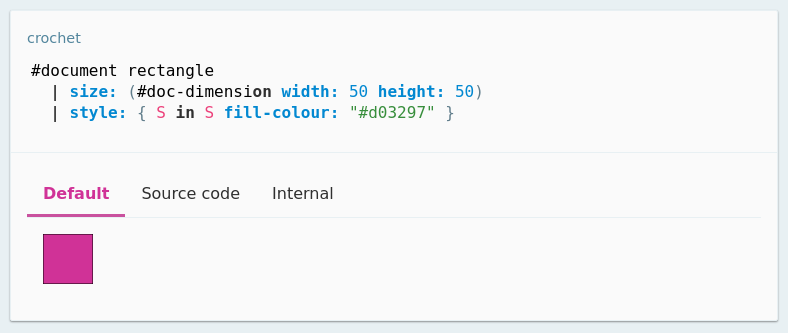
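The key mechanism in the time-range example is dispatching on two things at once: the type of the value and the type of the perspective. As a rough illustration outside Crochet, the sketch below approximates that in Python with a registry keyed by both types. All names here (the perspective classes, representation, debug_representation) are assumptions made for the example, plain dictionaries and strings stand in for Crochet documents, and lookup is by exact type only, which is much simpler than real multi-methods.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


# Hypothetical perspective markers, loosely mirroring the Crochet types.
class DefaultPerspective: ...
class DatePerspective: ...
class DurationPerspective: ...


@dataclass(frozen=True)
class TimeRange:
    start: datetime
    stop: datetime


# Registry of representations, keyed by (value type, perspective type).
_REPRESENTATIONS = {}


def representation(value_type, perspective_type):
    """Register a function as the representation for this pair of types."""
    def register(fn):
        _REPRESENTATIONS[(value_type, perspective_type)] = fn
        return fn
    return register


def debug_representation(value, perspective):
    """Look up a representation by exact type match (no subtyping here)."""
    return _REPRESENTATIONS[(type(value), type(perspective))](value, perspective)


@representation(datetime, DatePerspective)
def _(value, perspective):
    return value.isoformat()


@representation(timedelta, DurationPerspective)
def _(value, perspective):
    return {"days": value.days, "seconds": value.seconds}


@representation(TimeRange, DefaultPerspective)
def _(value, perspective):
    date = DatePerspective()
    return {
        "start": debug_representation(value.start, date),
        "stop": debug_representation(value.stop, date),
    }


@representation(TimeRange, DurationPerspective)
def _(value, perspective):
    # Delegate to the duration representation, as the Crochet version does.
    return debug_representation(value.stop - value.start, perspective)


time_range = TimeRange(
    datetime(2022, 2, 1, tzinfo=timezone.utc),
    datetime(2022, 3, 24, tzinfo=timezone.utc),
)
```

Because anyone can register a new pair, a package can add perspectives for types it does not own, which is the extensibility the multi-method design is after.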

The representations are not restricted to text and tables. Documents can also be SVG-inspired shapes, which means that we could have colour types be represented as follows:

A square filled with the colour that we’re representing

Indeed, the Crocheted Adventure tutorial book relies on these rich documents to create a “roleplaying game-like” experience for people following along the book’s examples in the Playground:

An example of domain-specific debugger from the Crocheted Adventure book

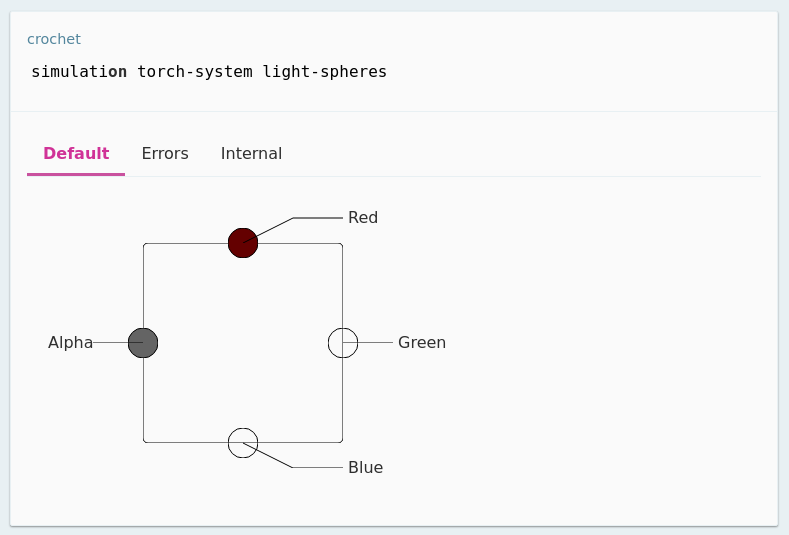

Tracing support and time-travelling

A more exciting piece of this, however, is that you can combine these domain-specific debuggers with the Tracing package to create your own domain-specific time-travelling debuggers!

For example, suppose you’re writing a video game. You might represent the player character in the game with a type like this:

type player(

x is cell<integer>,

y is cell<integer>,

gravity is integer,

speed is integer

);

Then, we may give the player an update command that is called each frame to respond to the game’s physics and player’s input:

command player update do

let Speed = condition

when keyboard is-held: #key right => self.speed;

when keyboard is-held: #key left => self.speed negate;

otherwise => 0;

end;

let Gravity = condition

when self is-touching-the-ground => 0;

otherwise => self.gravity;

end;

self.x <- self.x value + Speed;

self.y <- self.y value + Gravity;

end

When playing the game, we might notice something odd going on with the player movement. And it might be a bit overwhelming to do so while playing the game and worrying about all of the little creatures coming at the player with murderous intent in their eyes; so it might be useful to record a play session and look at it from different angles.

You could just record a video, of course. But with Crochet it doesn’t take that much effort to record what’s happening with the game and build a debugger for this specific case. For example, here we could instrument the update command to trace some information, similar to what you’d do with logging in other languages:

open crochet.debug;

singleton player-update;

command player update do

// (...same as before...)

transcript tag: player-update inspect: [

x -> self.x value,

y -> self.y value,

speed -> Speed,

gravity -> Gravity

];

end

The primary difference from logging libraries you might be familiar with from other languages is this “tag” argument. Here it really is just a way of categorising this log entry, and nothing else. It can be any value, but using a singleton type makes it trivial to guarantee that you have a unique tag in the log.

Next, we need to record the execution. Your game would probably have an entry point, and we can use the Tracing package to record everything happening from within that entry point. So we open the Tracing package:

open crochet.debug.tracing;

And we run the game (we don’t really need to modify anything else), but we run it wrapped in the trace record: _ in: _ command:

let Trace =

trace record: (#trace-constraint has-tag: player-update) in: {

game run;

};

And that’s it! We now have a complete record of the game as the player plays it. Well, not “complete” as in “we’ve recorded every single thing happening in the VM”. We could do that, but it’s often too expensive and unnecessary. What we’re doing here is recording a specific thing instead: the trace constraint there says we’re only interested in log messages that have been tagged with player-update. This is likely to keep trace sizes far more reasonable.

There’s a problem here, though. We’ve recorded all log messages, but they’re still just a list of these values we’ve logged. Looking at logs isn’t really a good use of anyone’s time. And it’s particularly unhelpful when we’re trying to understand how the movement feels. Thankfully, traces are just regular data structures, and we can manipulate them however we please with Crochet code.

Here, we can combine these traces with documents to present them in a way that lets us achieve this time-travelling debugger we want. Crochet even provides a document specifically for this: timeline takes in a list of documents and presents it in a way that you can step forwards and backwards in time. So our little debugger can be written like this:

Trace events

| map: { Event in

let Data = Event value as record;

#document fixed-width: 500 height: 300 with: [

#document rectangle

| origin: (#doc-point2d x: Data.x y: Data.y)

| size: (#doc-dimension width: 30 height: 50)

| style: { S in S fill-colour: "#d03297" },

#document plain-text: "Speed: [Data.speed to-text]"

| x: 5 y: 5,

#document plain-text: "Gravity: [Data.gravity to-text]"

| x: 5 y: 30

]

}

| timeline;

That’s the whole debugger. We get all of the events (which only includes the player update logs in this case), transform them into a small rendered frame with the information we’re interested in, then use the _ timeline command to present it as an interactive timeline. The result is this:

An example of the timeline-based document for traces
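The three moving parts here (tagged log entries, a recording that keeps only entries matching a constraint, and a timeline you can step through) are small enough to sketch outside Crochet. The Python below is an illustrative model only: record, transcript, has_tag, and Timeline are hypothetical names, frames are plain strings instead of documents, and nothing here reflects how the Tracing package is actually implemented.

```python
from contextlib import contextmanager
from dataclasses import dataclass, field


@dataclass
class Trace:
    """Events captured while a recording was active."""
    events: list = field(default_factory=list)


# Stack of (constraint, trace) pairs for the recordings currently active.
_active = []


@contextmanager
def record(constraint):
    """Capture every logged entry whose tag satisfies `constraint`."""
    trace = Trace()
    _active.append((constraint, trace))
    try:
        yield trace
    finally:
        _active.pop()


def transcript(tag, value):
    """Log a tagged value; only recordings that want the tag keep it."""
    for constraint, trace in _active:
        if constraint(tag):
            trace.events.append((tag, value))


def has_tag(wanted):
    return lambda tag: tag is wanted


class Timeline:
    """Step forwards and backwards through a list of rendered frames."""

    def __init__(self, frames):
        self.frames = list(frames)
        self.position = 0

    def current(self):
        return self.frames[self.position]

    def forward(self):
        self.position = min(self.position + 1, len(self.frames) - 1)
        return self.current()

    def backward(self):
        self.position = max(self.position - 1, 0)
        return self.current()


PLAYER_UPDATE = object()  # a unique tag, like the `player-update` singleton
OTHER = object()

with record(has_tag(PLAYER_UPDATE)) as trace:
    for x in (0, 3, 6):
        transcript(PLAYER_UPDATE, {"x": x, "speed": 3})
        transcript(OTHER, "ignored by this recording")

timeline = Timeline(f"x={value['x']} speed={value['speed']}" for _, value in trace.events)
```

The instrumented code never needs to know whether anything is recording; that decision lives entirely at the entry point, which is what keeps the game code unchanged.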

For full traces, it should be possible to simply record all activity in the VM and rely on effects to provide both accurate replay and the possibility of speculative backwards and forwards execution; Crochet programs should be pure for everything outside of effect handlers. In essence, this would mean no additional effort in recording and replaying an entire game (including changing code in it during replay and hitting entirely new codepaths) from the programmer’s perspective.

Imagine tools like rr or replay for any Crochet program, in any Crochet language, without special support from the programmer, and also not restricted to deterministic replaying. That’s the big goal of Crochet’s time-travelling support :)

Compiler plugins

This release adds really minimal support for compiler plugins, which in this implementation look more like your usual procedural macros. In Crochet, a compiler plugin is a function that takes in a Codebase, does some queries or transformations to it, and returns a new Codebase.

The plan for the future is to base this on a logical database for code, which would then allow any transformations applied by these plugins to be done incrementally, or undone if needed (e.g. because the user decided to roll back or remove pieces of code). But we’re not there yet, and there’s a lot of work to be done in order to get there.

This release limits the plugins to local transformations, and only allows plugins included in the VM itself. The two plugins currently available are derive: "equality" and derive: "json". The first one automatically implements structural equality for a data-only type:

@| derive: "equality"

type point2d(x is integer, y is integer);

test "Point2d has equality" do

let P1 = new point2d(1, 2);

let P2 = new point2d(1, 2);

let P3 = new point2d(2, 1);

assert P1 === P2;

assert P2 =/= P3;

end

The second one implements custom JSON serialisation and parsing (explained later in this page). These can be combined: they’re applied in sequence from inner to outer, which means that outer transformations can actually change declarations introduced by inner transformations:

@| derive: "json"

@| derive: "equality"

type point2d(x is integer, y is integer);

test "JSON parsing" do

let Domain = #json-serialisation defaults

| tag: "point2d" brand: #point2d;

let Json = #extended-json with-serialisation: Domain;

assert

Json parse: (Json serialise: [new point2d(1, 2), new point2d(2, 1)])

=== [new point2d(1, 2), new point2d(2, 1)];

end
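The “function from Codebase to Codebase, applied from inner to outer” model can be illustrated with a toy in Python. Everything in this sketch is an assumption made for the example: a codebase is modelled as a plain dictionary from declaration names to kinds, and derive_equality, derive_json, and count_commands are made-up plugins that do not reflect what the real derivations generate.

```python
from functools import reduce


def derive_equality(codebase):
    """Add an equality command for every type in the codebase."""
    new = dict(codebase)
    for name, kind in codebase.items():
        if kind == "type":
            new[f"{name} === {name}"] = "command"
    return new


def derive_json(codebase):
    """Add a serialisation command for every type in the codebase."""
    new = dict(codebase)
    for name, kind in codebase.items():
        if kind == "type":
            new[f"{name} to-json"] = "command"
    return new


def count_commands(codebase):
    """A plugin that observes declarations added by earlier plugins."""
    new = dict(codebase)
    new["#commands"] = sum(1 for kind in codebase.values() if kind == "command")
    return new


def apply_plugins(codebase, plugins_outer_to_inner):
    # Annotations are written outermost first, but applied innermost first.
    return reduce(
        lambda current, plugin: plugin(current),
        reversed(plugins_outer_to_inner),
        codebase,
    )


source = {"point2d": "type"}
result = apply_plugins(source, [count_commands, derive_json, derive_equality])
```

With count_commands outermost it sees the two commands the inner plugins introduced; move it innermost and it sees none. Each plugin returns a new dictionary instead of mutating its input, so source is left untouched.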

Optional capabilities

Packages can now also define optional capabilities. They need to do so because Crochet requires capabilities and dependencies to be statically known for tooling purposes. So if a package does not really need a certain capability to work, but would be able to provide additional features if granted it, the package can specify this as an optional capability.

If the user chooses to provide the capability, the package can then load additional code based on its presence, or fall back to a minimal version of itself in its absence.

This gets rid of the earlier problem of packages requesting capabilities only to pass them around, which is not something you can see from the package’s definition; code audits are also not very useful if you allow (unchecked) upgrades to the package’s code. Optional capabilities take care of both of those issues, but there are still some problems left to address in the area of granting transient capabilities, which need a bit more experimentation.
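The loading rule described here is simple enough to write down. The following Python sketch is purely illustrative: the Manifest shape, the load function, and the capability names are all hypothetical and are not Crochet’s package format or loader.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Manifest:
    """Hypothetical package manifest; field names are illustrative only."""
    name: str
    required: frozenset
    optional: frozenset = frozenset()


def load(manifest, granted):
    """Decide which parts of a package to load for the granted capabilities."""
    granted = frozenset(granted)
    missing = manifest.required - granted
    if missing:
        raise PermissionError(f"{manifest.name} needs {sorted(missing)}")
    # Optional capabilities never block loading; they only unlock extra code.
    extras = manifest.optional & granted
    return {"core"} | {f"extra:{capability}" for capability in extras}


pkg = Manifest(
    "image-viewer",
    required=frozenset({"ui"}),
    optional=frozenset({"file-system"}),
)
```

Since both sets are declared up front, tooling can show the user everything a package might ever use before any code runs.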

New abstractions for effect handlers

Effect handlers have now fully diverged from their algebraic origins, and resemble a lot more early Smalltalk’s and Lisp’s Signals/Conditions systems, though without the more powerful retry mechanisms, since Crochet’s effects are not meant to be used only for exceptional situations.

This one is covered at length in the reference docs, but it amounts to moving away from things like:

effect non-local with

'return(value);

end

singleton non-local;

command non-local handle-in: (Block is (() -> A)) -> A do

handle

Block();

with

on non-local.return(X) => return X;

end

end

command list find: (Predicate is (A -> boolean)) do

non-local handle-in: {

for X in self if Predicate(X) do

perform non-local.return(X);

end

nothing;

};

end

To:

effect non-local with

'return(value);

end

handler non-local-return with

on non-local.return(X) => return X;

end

command list find: (Predicate is (A -> boolean)) do

handle

for X in self if Predicate(X) do

perform non-local.return(X);

end

nothing;

with

use non-local-return;

end

end

It might not sound like much, but this simplifies using and reusing effects and handlers quite a lot. Especially when you need to install a bunch of them—which, given the way Crochet encourages you to use effects because every piece of Crochet’s tooling breaks if you choose not to… well, that happens quite often :’)

A preview of upcoming packages

This release adds some new, but quite unfinished, packages to the standard library, covering concurrency, networking, and UI.

Concurrency primitives

The specifics of the concurrency model for Crochet aren’t set in stone yet. That said, Crochet aims to be a cooperative (coroutines-based) concurrent language, with preemptive concurrency only available through “Zones”. These are fully isolated processes that can be pinned to different locations, including remote computers, and local or remote zones should work transparently.

If you’re not very familiar with E’s concept of Vats, some additional explanation is in order here. Zones (and E’s Vats) are pretty much a “group of actors” with very well-specified boundaries. In Crochet, communication within a Zone (i.e. actor A sending a message to actor B when they’re both in the same Zone) can be done synchronously, can share memory, and is bound to cooperative concurrency semantics (that is, they run in the same thread, and each actor is expected to cooperate on yielding its execution time).

Communication between Zones is, however, always asynchronous. Zones are entirely isolated, so if actors of different Zones interact then messages must be serialised and copied over. With capability types, this means passing strong capabilities through this serialisation, but not sending the object or data: the other side will have a “proxy” for interacting with the original source. This differentiation allows Zones themselves to be local (in a different thread or processor core), or remote (in a different computer), without making this distinction really apparent in the program.

That said, Zones are pretty much a sketch in Crochet currently, and the VM does not implement them in any useful way yet.

What is currently implemented is a series of primitives:

- Deferreds and Promises: these currently provide direct access to the underlying JavaScript VM’s promises mechanism. This will likely change soon, as JS’s promise semantics are too complex.

  Waiting on a promise always causes the current execution process to yield to other scheduled processes. The Crochet VM is not currently managing these (it inherits the microtask handling from the JS VM), which causes some odd issues every now and then.

- CSP Channels: these provide something similar to Clojure’s core.async take on Hoare’s Communicating Sequential Processes formalism. In Crochet, channels always have a buffer, which moves them away from CSP’s synchronisation semantics. This might be revisited in the future.

- Event streams: these are pretty much the same as Rx’s Observables. A discrete, push-based stream of events with a functional API on top. Along with observable cells they form a basis for reactive, incremental programs.

- Observable Cells: these are more akin to variables you might find in data-flow languages like Lucid or Flapjax. An observable cell is a value that changes over time, but that also provides a cursor into a certain point of this discrete series of values (here, generally the last value in the series). Its semantics are still push-based, which is essential for reactive, incremental programs. These cells form the basis of all reactive UI work in Crochet.

- Actors: the primary concurrency model in Crochet is supposed to be actor-based; that’s not yet the reality, but this preview implementation at least hints at some of the ideas there.

Crochet’s actors are a bit odd-looking, however, because they’re mostly based on Erlang’s and Session Types’ takes on actor implementations. In an object-oriented world, this means that Actors are really a proxy to a set of “behaviours”, and the actor may change its backing behaviours as it handles messages.

For example, consider needing to implement the following capability-based protocol:

service account {

authenticate(token: secret<text>) -> authenticated-account;

}

service authenticated-account {

withdraw(n: number) -> authenticated-account;

deposit(n: number) -> authenticated-account;

}

In Crochet this would mean you have two sets of behaviours: account, which only allows authentication messages; and authenticated-account, which allows operations on a specific account. The implementation, then, follows this distinction. We first start with defining the protocol possibilities, type-wise:

abstract account-state is actor-state;

singleton account-state-base is account-state;

type account-state-authenticated(account is account)

is account-state;

abstract account-message-base is actor-message;

type account-message-authenticate(token is secret<text>)

is account-message-base;

abstract account-message-authd is actor-message;

type account-message-withdraw(n is integer) is account-message-authd;

type account-message-deposit(n is integer) is account-message-authd;

Then we define the possibilities behaviour-wise:

command account-state-base accepts: (M is account-message-base) = true;

command account-state-base handle: (M is account-message-authenticate) do

let Account = perform account.retrieve(package unseal: M.token);

self transition-to: new account-state-authenticated(Account);

end

command account-state-authenticated accepts: (M is account-message-authd) =

true;

command

account-state-authenticated handle: (M is account-message-withdraw)

do

self.account withdraw: M.n;

self done;

end

command

account-state-authenticated handle: (M is account-message-deposit)

do

self.account deposit: M.n;

self done;

end

To create an actor we spawn it within a particular zone, specifying its initial behaviour:

let Actor = root-zone spawn: account-state-base;

Then, if we try to withdraw before we authenticate, nothing can happen, because account-state-base does not handle such messages:

Actor send: new account-message-withdraw(100);

// nothing happens
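The “actor as a proxy over swappable behaviours” idea can also be modelled in a few lines of Python. This is a simplified, synchronous sketch: there is no mailbox, zone, or sealing of tokens, and Actor, BaseState, AuthenticatedState, and the accounts dictionary are names invented for this example.

```python
class Actor:
    """Proxy over a current behaviour; the behaviour may change per message."""

    def __init__(self, behaviour):
        self.behaviour = behaviour

    def send(self, message):
        name, *arguments = message
        handler = getattr(self.behaviour, "on_" + name, None)
        if handler is None:
            return False  # not accepted in this state: nothing happens
        next_behaviour = handler(*arguments)
        if next_behaviour is not None:
            self.behaviour = next_behaviour  # the "transition-to" step
        return True


class BaseState:
    """Only accepts authentication messages."""

    def __init__(self, accounts):
        self.accounts = accounts  # maps token -> account dictionary

    def on_authenticate(self, token):
        account = self.accounts.get(token)
        # Unknown tokens leave the actor in its current state.
        return AuthenticatedState(account) if account is not None else None


class AuthenticatedState:
    """Accepts operations on one specific account."""

    def __init__(self, account):
        self.account = account

    def on_withdraw(self, n):
        self.account["balance"] -= n

    def on_deposit(self, n):
        self.account["balance"] += n


accounts = {"secret-token": {"balance": 50}}
actor = Actor(BaseState(accounts))
```

Sending ("withdraw", 100) to a fresh actor returns False and leaves the balance alone, because BaseState has no handler for it; after ("authenticate", "secret-token") the same message is accepted.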

This enforcement of state machine transitions in actors is pretty much lifted from Erlang’s gen_statem, but as you can see here the syntax gets pretty unwieldy quite quickly. It’s likely that, in the future, there will be a specific DSL for actors in Crochet. I have previously played around with this in a tiny actor-based object oriented language called “smol”, so most of the inspiration for the syntax might come from there.

Here’s what the previous example would look like in smol:

object account where

on authenticate: Token =

let Account = accounts retrieve: Token,

authenticated-account for: Account.

end

object authenticated-account for: Account where

on deposit: N =

Account deposit: N,

nothing.

on withdraw: N =

Account withdraw: N,

nothing.

end

to main: Arguments =

let Actor = spawn account,

Actor

| authenticate: "1"

| deposit: 100

| withdraw: 10.

A reactive UI framework

Agata is the new cross-platform UI framework, based on a similar reactive model to those of data-flow languages like Flapjax. That said, this release only includes support for DOM-based rendering, and there’s still a lot of work to be done on widgets and automatic lifting.

Probably the best way of looking at Agata right now is to check the examples in the repo. There’s a complete to-do list application, a small chat application based on websockets, and a work-in-progress widget gallery.

But here’s a very small example:

open crochet.concurrency;

open crochet.ui.agata;

command main-html: Root do

handle

let Name = #observable-cell with-value: "";

agata show: (

#widget flex-column: [

#widget text-input

| placeholder: "What's your name?"

| value: Name,

"Hello, [Name]"

]

| gap: (1 as rem)

| align-items: #flex-align center

| justify-content: #flex-justify center

| with-padding: { P in P all: (2 as rem) }

| fill-screen

);

with

use agata-dom-renderer root: Root;

end

end

Give this the crochet.ui.agata/ui-control capability and run it in a web-browser to see the "Hello, ..." text piece updating automatically as the text in the text input changes.

It’s quite possible that the bi-directional use of observable cells here will be replaced (once again) with a uni-directional one, with reference types providing a way of propagating the values. The bi-directional approach does lead to confusing behaviour in some cases, but that’s something I’m still experimenting with.
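For readers unfamiliar with observable cells, the following Python sketch shows the general data-flow idea: a cell holds a value, and anything derived from it is recomputed when the value changes. The `ObservableCell` class and its methods are hypothetical names for illustration only. This is not Agata's API, and it only models the uni-directional direction (cell to view).

```python
class ObservableCell:
    """A value that notifies subscribers whenever it is replaced."""

    def __init__(self, value):
        self._value = value
        self._subscribers = []

    @property
    def value(self):
        return self._value

    def set(self, value):
        self._value = value
        # Copy the list so subscribers may subscribe others while notified.
        for callback in list(self._subscribers):
            callback(value)

    def subscribe(self, callback):
        # New subscribers immediately see the current value.
        self._subscribers.append(callback)
        callback(self._value)

    def map(self, fn):
        # A derived cell that tracks fn(value) of this cell.
        derived = ObservableCell(fn(self._value))
        self.subscribe(lambda v: derived.set(fn(v)))
        return derived


name = ObservableCell("")
greeting = name.map(lambda n: f"Hello, {n}")

rendered = []                        # stands in for the text widget
greeting.subscribe(rendered.append)

name.set("Ana")                      # stands in for typing in the input
name.set("Bea")
print(rendered)
```

Each change to `name` pushes a new greeting into `rendered`, much like the "Hello, ..." text follows the text input in the example above.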

HTTP and WebSockets support

These are currently exposed in the WebAPI wrapper library. However, they do a lot more than just wrap the underlying functionality, so they’ll quite possibly be moved to their own library in the next version.

Here’s an example of using the HTTP client (keep in mind that this is still subject to all the restrictions of the browser’s fetch API):

open crochet.wrapper.browser.web-apis;

let Token = package seal: "my-secret";

http with-real-client: {

#http-request url: "http://some.domain.with.cors/api/read"

|> _ post: (#http-body json: [username -> "me"])

|> _ headers: { H in

H at: "Authorization" put-sensitive: "Bearer [Token]"

}

|> http send: _

|> _ body

|> _ json

};

There’s some rudimentary support for handling sensitive headers appropriately here, though response headers are not handled yet.

WebSockets has a simpler API and is already using the new handlers feature:

open crochet.concurrency;

open crochet.wrapper.browser.web-apis;

handle

let Ws = #websocket open: "ws://localhost:8000";

Ws connect wait;

Ws send: "ping";

let Got-pong = #deferred make;

Ws listener

| keep-if: {Msg in Msg is websocket-message-received }

| subscribe: { Msg in

condition

when Msg message =:= "pong" => Got-pong resolve: Msg;

otherwise => nothing;

end

};

Got-pong wait;

Ws close;

with

use real-websocket-handler;

end

A safe URL type

In order to make network packages safer, a new URL type is provided, which handles parsing, serialisation, and composition of URLs in ways that preserve their proper semantics.

For example:

open crochet.network.types;

command app base-url =

#url from-text: "http://domain.com/api";

command category show-url =

(app base-url / "products" / self.id / "list")

| with-query: { Q in Q at: "direction" put: "ASC" };

Here, app base-url creates a base URL for the API, and categories create URLs that look like {base url}/products/{id}/list?direction=ASC, while making sure that none of these dynamic components can subvert the semantics expected in their context.

Template URLs are likely going to be added soon, since the syntax here becomes unwieldy quite fast as well.
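The underlying idea (encode each dynamic piece for the position it lands in, rather than concatenating text) can be sketched in Python with the standard `urllib.parse` module. The `extend_url` helper below is a made-up name for illustration and says nothing about how Crochet's URL type is implemented.

```python
from urllib.parse import quote, urlencode, urlsplit, urlunsplit


def extend_url(base, segments, query=None):
    """Append path segments and a query to `base`, encoding each piece."""
    parts = urlsplit(base)
    encoded = []
    for segment in segments:
        text = str(segment)
        # Percent-encoding cannot neutralise dot segments, so reject them.
        if text in (".", ".."):
            raise ValueError("dot segments are not allowed")
        # safe="" also encodes "/", so one value is always one segment.
        encoded.append(quote(text, safe=""))
    path = "/".join([parts.path.rstrip("/")] + encoded)
    return urlunsplit(
        (parts.scheme, parts.netloc, path, urlencode(query or {}), "")
    )


base = "http://domain.com/api"
print(extend_url(base, ["products", "42", "list"], {"direction": "ASC"}))

# A hostile id cannot escape its segment or add its own query string.
print(extend_url(base, ["products", "../admin?x=1", "list"]))
```

With plain string concatenation, the second id would have changed both the path and the query; here it stays a single, oddly named path segment.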

Additions to existing packages

Custom JSON serialisation

This release provides the JSON package with an “extended JSON” format, which can be used to serialise and parse arbitrary Crochet values. You do need to specify which values and how to serialise them, however, as otherwise you’d subvert all Crochet’s security mechanisms.

For example, say I have an application with the following type:

type point2d(x is integer, y is integer);

I can implement the to-json and from-json traits for it:

open crochet.language.json;

implement to-json for point2d;

command json-serialisation lower: (X is point2d) =

#json-type tag: #point2d value: (self lower: [x -> X.x, y -> X.y]);

implement from-json for #point2d;

command json-serialisation reify: (X is map) tag: #point2d =

new point2d(X at: "x", X at: "y");

And then define a specialised serialisation for it:

let Domain =

#json-serialisation defaults

| tag: "point2d" brand: #point2d;

let Json = #extended-json with-serialisation: Domain;

From there on, I have the guarantee that Json parse: (Json serialise: X) === X, for any X included in my Domain.

For example:

Json serialise: [new point2d(1, 2), new point2d(3, 4)]

|> Json parse: _

|> _ === [new point2d(1, 2), new point2d(3, 4)];

With compiler plugins this is a lot less work for data-only types (you should never automatically derive serialisation for capability-bearing types!):

@| derive: "json"

type point2d(x is integer, y is integer);

@| derive: "json"

type point3d(x is integer, y is integer, z is integer);

define domain = lazy (

#json-serialisation defaults

| tag: "point2d" brand: #point2d

| tag: "point3d" brand: #point3d

);

define my-json = lazy (

#extended-json with-serialisation: (force domain)

);

In the custom JSON format that the package uses, all values that are not translatable to basic JSON types end up as:

{

"@type": "<what you've decided as `tag`>",

"value": (whatever `json-serialisation lower: _` returns)

}

Because values are tagged with their types in the serialisation, you can recursively serialise any data in the serialisation domain, and later parse it, without needing a specific schema.
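As an illustration of why tagging makes schema-free round-trips possible, here is a small Python sketch of the same wire format. The `Domain` class and its `register`, `lower`, and `reify` methods are hypothetical stand-ins that borrow the names used above. They are not the Crochet package's API.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class Point2d:
    x: int
    y: int


class Domain:
    """An explicit allow-list of types that may be serialised and parsed."""

    def __init__(self):
        self._by_type = {}  # class -> (tag, lower function)
        self._by_tag = {}   # tag -> reify function

    def register(self, tag, cls, lower, reify):
        self._by_type[cls] = (tag, lower)
        self._by_tag[tag] = reify
        return self

    def lower(self, value):
        if type(value) in self._by_type:
            tag, lower = self._by_type[type(value)]
            return {"@type": tag, "value": self.lower(lower(value))}
        if isinstance(value, list):
            return [self.lower(v) for v in value]
        if isinstance(value, dict):
            # Simplification: plain dicts must not use "@type" as a key.
            return {k: self.lower(v) for k, v in value.items()}
        if value is None or isinstance(value, (str, int, float, bool)):
            return value
        # Anything outside the domain is refused rather than guessed at.
        raise TypeError(f"{type(value).__name__} is not in the domain")

    def reify(self, data):
        if isinstance(data, list):
            return [self.reify(v) for v in data]
        if isinstance(data, dict):
            if "@type" in data:
                # Unknown tags raise KeyError: only registered types parse.
                return self._by_tag[data["@type"]](self.reify(data["value"]))
            return {k: self.reify(v) for k, v in data.items()}
        return data

    def serialise(self, value):
        return json.dumps(self.lower(value))

    def parse(self, text):
        return self.reify(json.loads(text))


domain = Domain().register(
    "point2d",
    Point2d,
    lambda p: {"x": p.x, "y": p.y},
    lambda m: Point2d(m["x"], m["y"]),
)

points = [Point2d(1, 2), Point2d(3, 4)]
text = domain.serialise(points)
print(text)
print(domain.parse(text) == points)
```

Because the parser only reconstructs types that were registered, data arriving from outside cannot conjure up arbitrary objects, which is the point of having to spell the domain out.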

The secret type

Handling sensitive data and making sure it doesn’t leak is hard, even when you have a capability-secure language (mostly because capabilities don’t usually deal with data flow in that way). Security type systems have treated these as principals, and earlier work has done this using dynamic wrapping. I gave a talk about exactly these two approaches last year.

This release includes a secret type with a single principal, denoted by the seal capability. Secrets are constructed with a particular seal, and they can be opened as long as you have that same seal. This leads to the following pattern (which might just become a default package functionality going forward):

local define seal = #secret-seal description: "my seal";

command package seal: Value = #secret value: Value seal: (force seal);

command package unseal: (Value is secret) = Value unseal: (force seal);

Then you can pass secret data around with the guarantee that it will not be read by any unintended party, and will not accidentally end up in a sink you didn’t expect (e.g.: a disk or network log).

The VM does not handle secret data yet, so things like memory dumps and debuggers will still have access to it. Secret only protects your data from other Crochet code, not from powerful external system features!